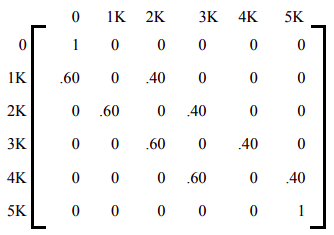

A Markov chain with absorbing states. If every state can reach an absorbing state, then the Markov chain is an absorbing Markov chain.

Periodicity For Markov Chain Cross Validated

Absorbing Markov Chain Wikipedia

Markov Chain Expected Times To Absorption Mathematics Stack Exchange

For a chain to be an absorbing Markov chain, every transient state must be able to reach an absorbing state in a finite number of steps. In a finite chain, absorption then occurs with probability 1.

What is an absorbing Markov chain? For Markov systems, or open Markov models, which are generalizations of the Markov chain, an introductory review paper is [6]. For Markov chains on a general state space, an excellent reference is [5].

A continuous-time process is called a continuous-time Markov chain (CTMC). However, having an absorbing state is only one of the prerequisites for a Markov chain to be an absorbing Markov chain. A discrete-time stochastic process is denoted $\{X_n\}_{n \ge 0}$.

A state $i$ is called absorbing if it is impossible to leave this state. A useful property of the transition matrix is that it gives the long-term probability of ending up in one state, given a starting state, simply by raising the matrix to a large power.
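The matrix-power idea can be sketched in a few lines of plain Python. The 3-state matrix `P` below is a made-up example (state 2 is absorbing), and the helper names `mat_mul` and `mat_pow` are illustrative only, not taken from any library.

```python
def mat_mul(a, b):
    """Multiply two square matrices given as lists of rows."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def mat_pow(p, steps):
    """Raise a square matrix to a non-negative integer power by repeated squaring."""
    n = len(p)
    result = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    base = [row[:] for row in p]
    while steps > 0:
        if steps & 1:
            result = mat_mul(result, base)
        base = mat_mul(base, base)
        steps >>= 1
    return result


# Hypothetical chain: states 0 and 1 are transient, state 2 is absorbing.
P = [
    [0.50, 0.50, 0.00],
    [0.25, 0.50, 0.25],
    [0.00, 0.00, 1.00],
]

# Entry [i][j] approximates the long-run probability of being in j when starting in i.
P_long = mat_pow(P, 200)
for row in P_long:
    print([round(x, 6) for x in row])
```

For this example every row of `P_long` puts essentially all of its mass on the absorbing state, which is what the prose above predicts.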

A Markov chain is known as irreducible if there exists a chain of steps between any two states that has positive probability. The rat in the open maze yields a Markov chain that is not irreducible.

On a diverse simulated benchmark MARGARET out-performed state-of-the-art methods in recovering. We survey common methods used to find the expected number of steps needed for a random walker to reach an absorbing state in a Markov chain. Each time state $i$ is visited, it will be revisited with the same probability $f_i$.
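One standard way to get the expected number of steps to absorption is to solve the linear system $(I - Q)\,t = \mathbf{1}$, where $Q$ is the transition matrix restricted to the transient states. The sketch below assumes a symmetric random walk on states 0 to 4 with absorbing barriers at 0 and 4; the matrix and the function names `solve` and `expected_steps` are illustrative assumptions.

```python
def solve(a, b):
    """Solve a x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(a)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        # Pick the row with the largest entry in this column as the pivot row.
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [x - factor * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def expected_steps(p, transient):
    """Expected number of steps to absorption from each transient state."""
    i_minus_q = [[(1.0 if i == j else 0.0) - p[i][j] for j in transient]
                 for i in transient]
    return solve(i_minus_q, [1.0] * len(transient))


# Hypothetical random walk: states 0 and 4 absorb, interior states move
# left or right with probability 1/2 each.
P = [
    [1.0, 0.0, 0.0, 0.0, 0.0],
    [0.5, 0.0, 0.5, 0.0, 0.0],
    [0.0, 0.5, 0.0, 0.5, 0.0],
    [0.0, 0.0, 0.5, 0.0, 0.5],
    [0.0, 0.0, 0.0, 0.0, 1.0],
]

steps = expected_steps(P, transient=[1, 2, 3])
print(steps)  # approximately [3.0, 4.0, 3.0]
```

The walker starting in the middle state needs 4 steps on average, and 3 from either neighbor of a barrier.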

The rat in the closed maze yields a recurrent Markov chain. A countably infinite sequence, in which the chain moves state at discrete time steps, gives a discrete-time Markov chain (DTMC). The only possibility to return to 3 is to do so in one step, so we have $f_3 = 1/4$, and 3 is transient.
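The link between the return probability and transience can be made quantitative. As a sketch, assume a state $i$ with return probability $f_i < 1$, and let $N_i$ be the number of visits to $i$, counting the initial one. Because each visit is followed by a return with the same probability $f_i$, the count $N_i$ is geometric:

$$
P(N_i = k) = f_i^{\,k-1}\,(1 - f_i), \quad k \ge 1,
\qquad
E[N_i] = \sum_{k \ge 1} k\, f_i^{\,k-1} (1 - f_i) = \frac{1}{1 - f_i}.
$$

With a return probability of $1/4$, this gives $E[N_i] = 1/(1 - 1/4) = 4/3$, a finite number of expected visits, which is what transience means. If instead $f_i = 1$, the state is visited infinitely often and is recurrent.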

If there exists some $n$ for which $p_{ij}^{(n)} > 0$ for all $i$ and $j$, then all states communicate and the Markov chain is irreducible. There are two communication classes, $C_1 = \{1, 2, 3, 4\}$ and $C_2 = \{0\}$.

A row with a 1 on the matrix's diagonal is an absorbing state, meaning that once the Markov chain is in that state it will never leave it. The stationary distribution gives information about the stability of a random process and, in certain cases, describes the limiting behavior of the Markov chain. We say that $(X_n)_{n \ge 0}$ is a Markov chain with initial distribution.
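The diagonal test, together with the requirement that every state can reach an absorbing state, can be sketched as follows. Both matrices are invented examples, and `absorbing_states` and `is_absorbing_chain` are hypothetical helper names.

```python
def absorbing_states(p, tol=1e-12):
    """Indices i whose row has a 1 on the diagonal, i.e. p[i][i] == 1."""
    return [i for i, row in enumerate(p) if abs(row[i] - 1.0) < tol]


def is_absorbing_chain(p):
    """True if an absorbing state exists and every state can reach one."""
    absorbing = absorbing_states(p)
    if not absorbing:
        return False
    # Search backwards along edges with positive probability,
    # starting from the absorbing states.
    reached = set(absorbing)
    frontier = list(absorbing)
    while frontier:
        j = frontier.pop()
        for i, row in enumerate(p):
            if row[j] > 0 and i not in reached:
                reached.add(i)
                frontier.append(i)
    return len(reached) == len(p)


# Hypothetical random walk on 0..4 with absorbing barriers at 0 and 4.
walk = [
    [1.0, 0.0, 0.0, 0.0, 0.0],
    [0.5, 0.0, 0.5, 0.0, 0.0],
    [0.0, 0.5, 0.0, 0.5, 0.0],
    [0.0, 0.0, 0.5, 0.0, 0.5],
    [0.0, 0.0, 0.0, 0.0, 1.0],
]

# Hypothetical chain with an absorbing state 0 that states 1 and 2 never reach.
stuck = [
    [1.0, 0.0, 0.0],
    [0.0, 0.0, 1.0],
    [0.0, 1.0, 0.0],
]

print(absorbing_states(walk), is_absorbing_chain(walk))
print(absorbing_states(stuck), is_absorbing_chain(stuck))
```

The second matrix shows why an absorbing state alone is not enough: states 1 and 2 cycle between each other forever, so the chain is not an absorbing Markov chain.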

If a Markov chain is not irreducible, it is called reducible. An absorbing chain starts in one initial state and eventually ends in an absorbing, or final, state. It has been addressed by many authors in the medical field.

Absorbing states are crucial for the discussion of absorbing Markov chains.

How to solve Markov chain hitting probabilities with linear algebra. A state is known as recurrent or transient depending upon whether or not the chain is certain to return to it.
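As a minimal sketch of the linear-algebra approach to hitting probabilities: for each transient state $i$, the probability $h_i$ of being absorbed in a chosen target state satisfies $h_i = p_{i,\text{target}} + \sum_j p_{ij} h_j$, with the sum over transient states $j$. The example chain (a symmetric random walk on 0 to 4) and the function name `hitting_probabilities` are assumptions made for illustration. Exact fractions are used so the result can be read off directly.

```python
from fractions import Fraction


def hitting_probabilities(p, transient, target):
    """Probability of absorption in `target` from each transient state.

    Solves (I - Q) h = r exactly, where Q is p restricted to the transient
    states and r holds the one-step probabilities into `target`.
    """
    n = len(transient)
    rows = []
    for a, i in enumerate(transient):
        row = [(Fraction(1) if a == b else Fraction(0)) - Fraction(p[i][j])
               for b, j in enumerate(transient)]
        row.append(Fraction(p[i][target]))
        rows.append(row)
    # Gauss-Jordan elimination on the augmented matrix.
    for col in range(n):
        pivot = next(r for r in range(col, n) if rows[r][col] != 0)
        rows[col], rows[pivot] = rows[pivot], rows[col]
        pivot_value = rows[col][col]
        rows[col] = [x / pivot_value for x in rows[col]]
        for r in range(n):
            if r != col and rows[r][col] != 0:
                factor = rows[r][col]
                rows[r] = [x - factor * y for x, y in zip(rows[r], rows[col])]
    return {state: rows[a][n] for a, state in enumerate(transient)}


half = Fraction(1, 2)
# Hypothetical random walk: states 0 and 4 are absorbing.
P = [
    [1, 0, 0, 0, 0],
    [half, 0, half, 0, 0],
    [0, half, 0, half, 0],
    [0, 0, half, 0, half],
    [0, 0, 0, 0, 1],
]

h = hitting_probabilities(P, transient=[1, 2, 3], target=4)
print(h)  # {1: Fraction(1, 4), 2: Fraction(1, 2), 3: Fraction(3, 4)}
```

The probabilities grow linearly with the starting position, the familiar gambler's-ruin result for a fair game.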

Discrete-time: board games played with dice. By the Markov property, once the chain revisits state $i$, the future is independent of the past, and it is as if the chain is starting all over again in state $i$ for the first time.

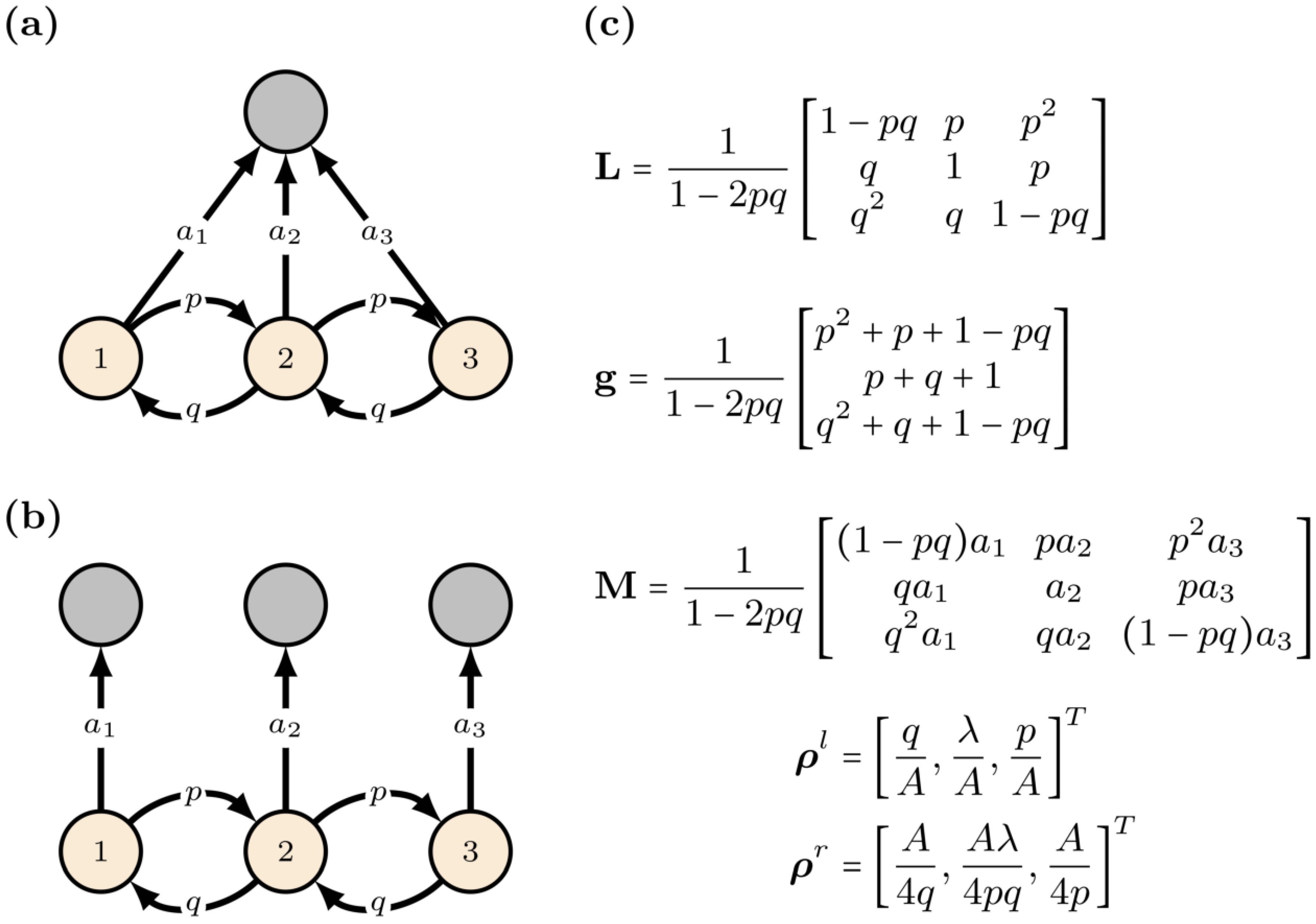

The process is at the present state. A Markov chain or Markov process is a stochastic model describing a sequence of possible events in which the probability of each event depends only on the state attained in the previous event. An absorbing Markov chain is a Markov chain in which it is impossible to leave some states once entered.

Homogeneous and non-homogeneous Markov chains and processes are covered in [13] and [4, Chapter 3]. Markov chain - transition matrix - expected value. Markov chain - transition matrix - average return time.

If all states in an irreducible Markov chain are ergodic, then the chain is said to be ergodic. Solving a system of linear equations using a transition matrix. A Markov chain is irreducible if all states belong to one class, that is, all states communicate with each other.
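Irreducibility can be checked by computing the communicating classes: two states share a class when each is reachable from the other along transitions with positive probability. The following is a small sketch; the example matrices and the names `reachable`, `communicating_classes`, and `is_irreducible` are illustrative assumptions.

```python
def reachable(p, start):
    """Set of states reachable from `start` through positive-probability steps."""
    seen = {start}
    stack = [start]
    while stack:
        i = stack.pop()
        for j, prob in enumerate(p[i]):
            if prob > 0 and j not in seen:
                seen.add(j)
                stack.append(j)
    return seen


def communicating_classes(p):
    """Partition the states into classes of mutually reachable states."""
    reach = [reachable(p, i) for i in range(len(p))]
    classes = []
    assigned = set()
    for i in range(len(p)):
        if i in assigned:
            continue
        cls = sorted(j for j in reach[i] if i in reach[j])
        classes.append(cls)
        assigned.update(cls)
    return classes


def is_irreducible(p):
    """A chain is irreducible when all states form a single class."""
    return len(communicating_classes(p)) == 1


# Hypothetical random walk with absorbing barriers at 0 and 4.
walk = [
    [1.0, 0.0, 0.0, 0.0, 0.0],
    [0.5, 0.0, 0.5, 0.0, 0.0],
    [0.0, 0.5, 0.0, 0.5, 0.0],
    [0.0, 0.0, 0.5, 0.0, 0.5],
    [0.0, 0.0, 0.0, 0.0, 1.0],
]
# Hypothetical 2-state chain that alternates between its states.
flip = [[0.0, 1.0], [1.0, 0.0]]

print(communicating_classes(walk))  # [[0], [1, 2, 3], [4]]
print(is_irreducible(walk), is_irreducible(flip))  # False True
```

A chain with an absorbing state and at least one other state can never be irreducible, since nothing leaves the absorbing state.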

MARGARET utilizes the inferred trajectory for determining terminal states and inferring cell-fate plasticity using a scalable absorbing Markov chain model. To create a switching mechanism governed by threshold transitions and threshold variable data for.

An absorbing state is a state that is impossible to leave once reached. Clearly, if the state space is finite for a given Markov chain, then not all the states can be transient. $C_1$ is transient, whereas $C_2$ is recurrent.

A game of snakes and ladders, or any other game whose moves are determined entirely by dice, is a Markov chain, indeed an absorbing Markov chain. This is in contrast to card games such as blackjack, where the cards represent a memory of the past moves. To see the difference, consider the probability for a certain event in the game. Estes et al. [1] used multistate Markov chains to model the epidemic of nonalcoholic fatty liver disease. Ergodic Markov chains have a unique stationary distribution, and absorbing Markov chains have stationary distributions with nonzero elements only in absorbing states.
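The contrast between the two kinds of stationary distribution can be illustrated by repeatedly applying the update $\pi \leftarrow \pi P$. This is only a sketch: both matrices are invented examples, the function names are hypothetical, and plain iteration is just one simple way to approximate the limit (it assumes the iteration converges, which holds for these two examples).

```python
def step(dist, p):
    """One update of a row distribution: dist times the transition matrix p."""
    n = len(p)
    return [sum(dist[i] * p[i][j] for i in range(n)) for j in range(n)]


def limiting_distribution(p, start, iterations=500):
    """Approximate the long-run distribution by iterating from `start`."""
    dist = list(start)
    for _ in range(iterations):
        dist = step(dist, p)
    return dist


# Hypothetical ergodic chain: every state communicates and has a self-loop.
ergodic = [
    [0.50, 0.50, 0.00],
    [0.25, 0.50, 0.25],
    [0.00, 0.50, 0.50],
]

# Hypothetical absorbing chain: random walk on 0..4 with barriers at 0 and 4.
walk = [
    [1.0, 0.0, 0.0, 0.0, 0.0],
    [0.5, 0.0, 0.5, 0.0, 0.0],
    [0.0, 0.5, 0.0, 0.5, 0.0],
    [0.0, 0.0, 0.5, 0.0, 0.5],
    [0.0, 0.0, 0.0, 0.0, 1.0],
]

pi_a = limiting_distribution(ergodic, [1.0, 0.0, 0.0])
pi_b = limiting_distribution(ergodic, [0.0, 0.0, 1.0])
print([round(x, 6) for x in pi_a])  # same limit from either start
print([round(x, 6) for x in pi_b])

absorbed = limiting_distribution(walk, [0.0, 1.0, 0.0, 0.0, 0.0])
print([round(x, 6) for x in absorbed])  # mass only on states 0 and 4
```

For the ergodic example the limit is the same whatever the starting state, while for the absorbing example the limit depends on the start and is supported only on the absorbing states.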

To determine the classes, we may give the Markov chain as a graph in which we only need to depict edges which signify nonzero transition probabilities, that is, pairs with $P_{xy} > 0$. The logic here is that $\Pr(X_{t+1} \in B \mid X_t \in A)$ can be written as a sum of terms $\Pr(X_{t+1} \in B \mid X_t = x)\,\Pr(X_t = x \mid X_t \in A)$, which is a weighted average of the conditional probabilities $\Pr(X_{t+1} \in B \mid X_t = x)$. Values range from 0 to 1 inclusive.

Therefore the state $i$ is absorbing if $p_{ii} = 1$ and $p_{ij} = 0$ for $i \neq j$. After creating a `dtmc` object, you can analyze the structure and evolution of the Markov chain, and visualize the Markov chain in various ways, by using the object functions. Also, you can use a `dtmc` object to specify the switching mechanism of a Markov-switching dynamic regression model (`msVAR`). An absorbing state $i$ is a state for which $P_{ii} = 1$.

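A discrete-time stochastic process $\{X_n\}_{n \ge 0}$ on a countable set $S$ is a collection of $S$-valued random variables defined on a probability space $(\Omega, \mathcal{F}, P)$. Here $P$ is a probability measure on a family of events $\mathcal{F}$ (a $\sigma$-field) in an event space $\Omega$. The set $S$ is the state space of the process. Moreover, $f_1 = 1$, because in order never to return to. You probably still remember the Markov chain, and anyone familiar with machine learning also knows the hidden Markov model (HMM). One property they share is the Markov property (memorylessness): the next state of the system depends only on the current state and not on any earlier states.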

We can always say that the probability is at least $\min_{x \in A} \sum_{y \in B} p_{xy} \ge \alpha \cdot \min_{x \in A,\, y \in B} p_{xy}$. Equilibrium probabilities in simple 3-state Markov chain.

10 4 Absorbing Markov Chains Mathematics Libretexts

L26 6 Absorption Probabilities Youtube

11 Markov Chains Jim Vallandingham Ppt Video Online Download

An Absorbing Markov Chain Model For Problem Solving

Getting Started With Markov Chains Revolutions

Prob Stats Markov Chains 21 Of 38 Absorbing Markov Chains Example 1 Youtube

Entropy Free Full Text On The Structure Of The World Economy An Absorbing Markov Chain Approach